UPDATE 23/12/2019: Much has been done since this post was originally published: Sifon now works on remote machines and supports Sitecore Commerce. The main news, however, is that it now has a website with documentation, demos, a roadmap, and other project-related items. Thus, it is best to refer to it - https://Sifon.UK.

Do you love PowerShell as much as I do?

Over time, Sitecore gains more and more new features, and its architecture sees some existing features split out into microservices. Maintenance becomes even more complicated and time-consuming. Just as an example, a few years ago we had only 3 databases coming with the platform, while now there are more than 15.

Luckily, PowerShell as a scripting technology comes to our assistance, providing a console-based API to most of the OS and platform management features. It is now possible to automate quite complex procedures just by scripting them. Take a look at the Sitecore XP installer, for example, or, to illustrate the complexity even better, at the installer script for Sitecore Commerce with almost a hundred steps. How long did it take you to run it all? That confirms how PowerShell became an inevitable part of developers' lives.

But here we come to another problem. The number of scripts we have grows quickly as the number of standard tasks grows. At some point I started noticing that something was going wrong. Those who read my blog from time to time may have noticed that I am kind of a productivity maniac, and being such a person, I started analyzing where the productivity bottlenecks are when working with PowerShell. What do you think they are?

Firstly, scripting means typing. Typing means typos. Typing means forgetting the syntax. Regardless of how perfect a typist one is, interaction with a keyboard has its costs. The good news is that PowerShell addresses these issues by providing interactive help and autocompletion. But it still consumes more of your time than UI operations (of course, those with proper, well-designed UX).

Secondly, we accumulate more ready-to-use scripts, and they all need to be stored somewhere. Somewhere on a filesystem, of course. The smartest use GitHub gists (which can be secret, not only public), and since GitHub accounts can now have private repositories, a few people I know simply keep their entire script libraries in private repos. The benefits are clear: if these libraries are well organized and properly structured, they give immediate access to the specific script one needs. The principal criterion here is time-to-access, and a GitHub repo certainly provides that. Of course, you may use any other version control hosting provider, as long as it is just as quick.

Thirdly, sensitive information. Scripts call other scripts, which in turn call other scripts (modules), which in turn call APIs - the same kind of nesting we typically have in our OOP languages. The issue comes from the outer-level scripts that contain sensitive parameters, such as usernames, passwords, secrets, tokens, and similar. You don't store all of the above at external source control hostings, even in a private repo, do you? If you do, I can recommend a few really worthy courses on information security. Of course, a developer can parametrize all sensitive information and bubble it up to the outer script, so that it becomes input parameters. But then we face the bottleneck I described first. Not only does one have to provide the parameters, but one also has to type them manually and store the values somewhere, which adds the overhead of accessing those values. Still not good.

In any case, the reality is that plenty of such scripts end up scattered across the hard drive, which still leads to all the issues described above.

Finally, when it comes to sharing those outer scripts, we meet the same story of separating sensitive data and environment-specific values from pure business logic.

Of course, developers do value their time and try to find compromises. I am no exception and decided to go my own developer's way, which, as you may guess, means developing something bespoke. So please welcome Sifon - a must-have tool for any Sitecore developer that simplifies most of your day-to-day DevOps activities.

But before going ahead - a bit of intro. Without a deep understanding of all the requirements, I made an early prototype of such a tool to address some of my own problems back in November 2018. Greatly impressed by Sitecore Instance Manager (also known as SIM), I decided to bring a graphical installer back to Sitecore, which was missing in those days. That of course was achieved; however, the most valuable feature was the ability to back up the entire Sitecore instance state, clean up the web folder and databases, and restore it all from a folder. All that in just about a minute, which seemed like nothing compared even to an installation with SIF. That is extremely helpful when you develop with Helix principles and often need to create rollback points before trying some complicated PoCs or, for example, to return to a clean, freshly installed version of Sitecore in order to deploy onto a clean instance.

That software started saving my time significantly, right from the moment of its creation! Later I added a few more frequently used functions to it, such as installing modules and Solr, rebuilding indexes and running a full republish, adding HTTP bindings to an instance, and moving its SSL certificates into trusted storage. I shared this PoC with a few of my colleagues working on the same project, saving their time as well.

But I could not share it with the entire Sitecore community, to my regret. Firstly, due to unstable code: a PoC sitting on top of another PoC and so on, turning Proof of Concept into Proof of Hell. Secondly, not only was the project architecture put under a big question mark, but the whole concept needed to be revised.

I wanted it to be not just maintainable, but extendable. By that I mean the ability of anyone working with this program to easily create their own plugins and extensions addressing some specific task, which can then be shared or publicly open-sourced. Multiplied by the efforts of the Sitecore community, that may become an unprecedentedly helpful tool for all of us. I know you folks are very creative!

So once again, please welcome Sifon. But first of all, what does the word Sifon mean?

Sifon is a simplified casual term for a whipping siphon that is used to create carbonated water, often with some fancy flavors. It requires cartridges of gas, also known as chargers, to pressurize the chamber holding the liquid. That very roughly explains the architecture of the Sifon tool I am presenting to you today.

In my case, Sifon is a nicely developed PowerShell host with numerous features that allow "playing" any custom PowerShell or C# code in the form of plugins. That makes for a curious metaphor - the host itself serves as the siphon body, while plugins stand in for the charger cartridges. The main screen looks quite minimalistic:

Features:

- stores credentials and environmental parameters in one place called profile, passing them as the parameters into the code

- supports multiple profiles for many environments with immediate switching between them by one click

- "backup-remove-restore" routine comes as built-in and is available immediately after setting up the first profile

- profile editor allows testing the connection and auto-detects a few basic parameters; it will be extended with more features

- runs multithreaded, responsively updating the progress along with the rest of the corresponding feedback

For a user, the feature list translates into these benefits:

- keep all your frequently used scripts at the same place, organizing them by nested folders as preferred

- separate scripts from sensitive parameters, allowing sharing and publishing them open source

- because of the above, one may create one's own repository and clone scripts directly into Sifon's plugins directory

NOTE! Before going ahead, please keep in mind that currently this is just a demo beta, so everything is subject to change, especially the UI. Also, the entire validation layer is disconnected, so please submit data responsibly!

The first thing to do after installation is to set up a profile. As mentioned above, a profile is an easy-to-switch set of parameters applicable to a specific environment. Put simply, the parameters from the currently selected profile are passed to any code executed, whether that is a built-in feature, a PowerShell script, or a DLL plugin. Both scripts and DLL plugins are expected to accept these parameters, so please refer to the provided example when building your own plugins.
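To make the idea of passing profile values into a script more tangible, here is a minimal sketch using PowerShell splatting. It is only an illustration and not Sifon's actual implementation: the `$sifonProfile` hashtable, its values, and the `$plugin` script block are hypothetical stand-ins, and only the parameter names are taken from this post.

```powershell
# Hypothetical illustration only: this is NOT how Sifon is implemented internally.
# A profile is modelled as a hashtable whose keys match the plugin's parameter names.
$sifonProfile = @{
    ServerInstance = 'localhost'
    Username       = 'sa'
    Password       = 'placeholder'                  # the real value lives in the profile, not in the script
    instanceName   = 'sc.local'
    instanceFolder = 'C:\inetpub\wwwroot\sc.local'
}

# Stand-in for a plugin script: a script block declaring the same parameters
$plugin = {
    param(
        [string]$ServerInstance,
        [string]$Username,
        [string]$Password,
        [string]$instanceName,
        [string]$instanceFolder
    )
    "Working on '$instanceName' at '$instanceFolder' (SQL: $ServerInstance)"
}

# Splatting (@ instead of $) binds every hashtable key to the parameter of the same name
$message = & $plugin @sifonProfile
$message
```

Switching to another environment then means swapping the hashtable, while the script itself stays free of secrets and can be shared as is.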

Important! In order for Sifon to function, you must create at least one profile that has a SQL Server connection set up. Otherwise, most of its functions will be greyed out, until required profile data is submitted.

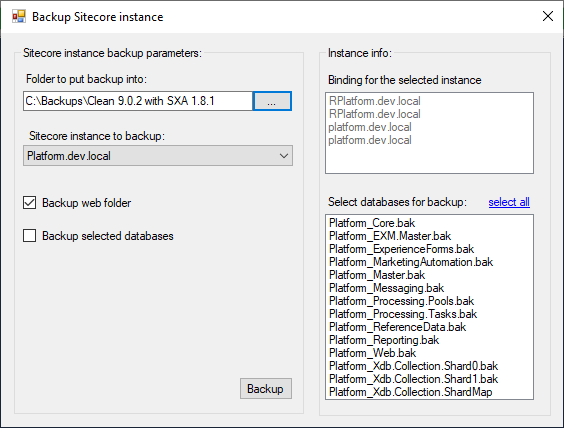

Now, let's take a look at Sifon's built-in "backup - remove - restore" routine. Making a backup is as easy as:

All you need to do is specify the folder to put the backups into, select which instance to back up (you may have many), and decide whether you want to back up the databases (selecting exactly which ones), the web folder, or both.
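For those curious what the database part of such a routine might boil down to when scripted by hand, below is a rough sketch. It is an assumption-laden illustration rather than Sifon's internal code: the `Get-BackupStatement` function, the database names, and the folder are all hypothetical, and the function only builds standard T-SQL `BACKUP DATABASE` statements without executing them.

```powershell
# Hypothetical helper, not part of Sifon: builds one T-SQL backup statement per database.
function Get-BackupStatement {
    param(
        [Parameter(Mandatory)][string[]]$Database,
        [Parameter(Mandatory)][string]$BackupFolder
    )
    foreach ($name in $Database) {
        # One .bak file per database inside the chosen backup folder
        $file = Join-Path $BackupFolder "$name.bak"
        # WITH INIT overwrites any backup sets already present in that file
        "BACKUP DATABASE [$name] TO DISK = N'$file' WITH INIT"
    }
}

# Example database names are assumptions; use the ones of the selected instance
$statements = Get-BackupStatement -Database 'sc_Core', 'sc_Master' -BackupFolder 'backups'
$statements
```

Each statement could then be sent to SQL Server, for instance with `Invoke-Sqlcmd` from the SqlServer module, using the server name and credentials from the current profile, while the web folder could be archived separately with `Compress-Archive`.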

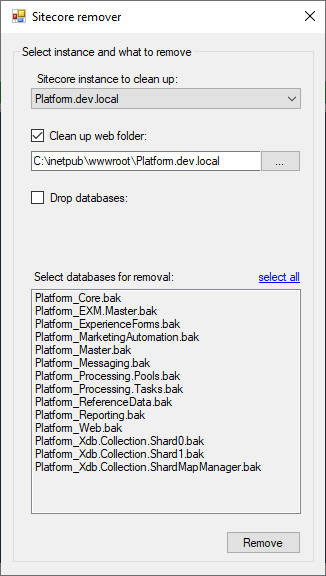

As you might know, it is always better to deploy Helix-based solutions into a clean environment, so you may want to clean the existing webroot before restoring into that folder. This is done in a similar manner: from the dropdown, select which instance to remove, and it will show you the webroot folder and installed databases. You just need to check or uncheck what you'd like to remove. Please note that databases can be deleted individually, and by selecting "manual entry" you can list all the databases from all the instances and select them for removal.

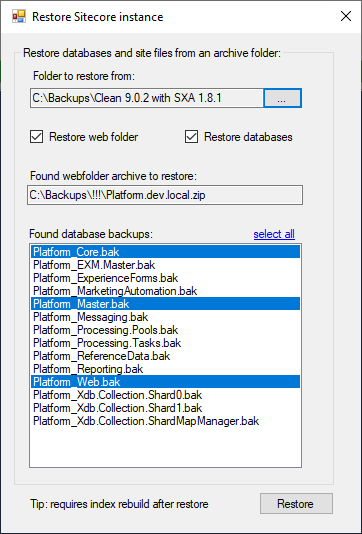

Needless to say, restoring looks and works much like the above. Simply select a folder with a corresponding backup and select what to restore. If the folder contains only a webroot or just databases, you'll be able to restore only what you've got.

Creating a capsule plugin requires you to write PowerShell in a specific manner; the two important points to mention are:

1. accepting parameters. Just put this at the top of your script, and these parameters will be provided automatically

param(

#Comes from profile

[string]$ServerInstance,

[string]$Username,

[string]$Password,

[string]$instanceName,

[string]$instanceFolder

)

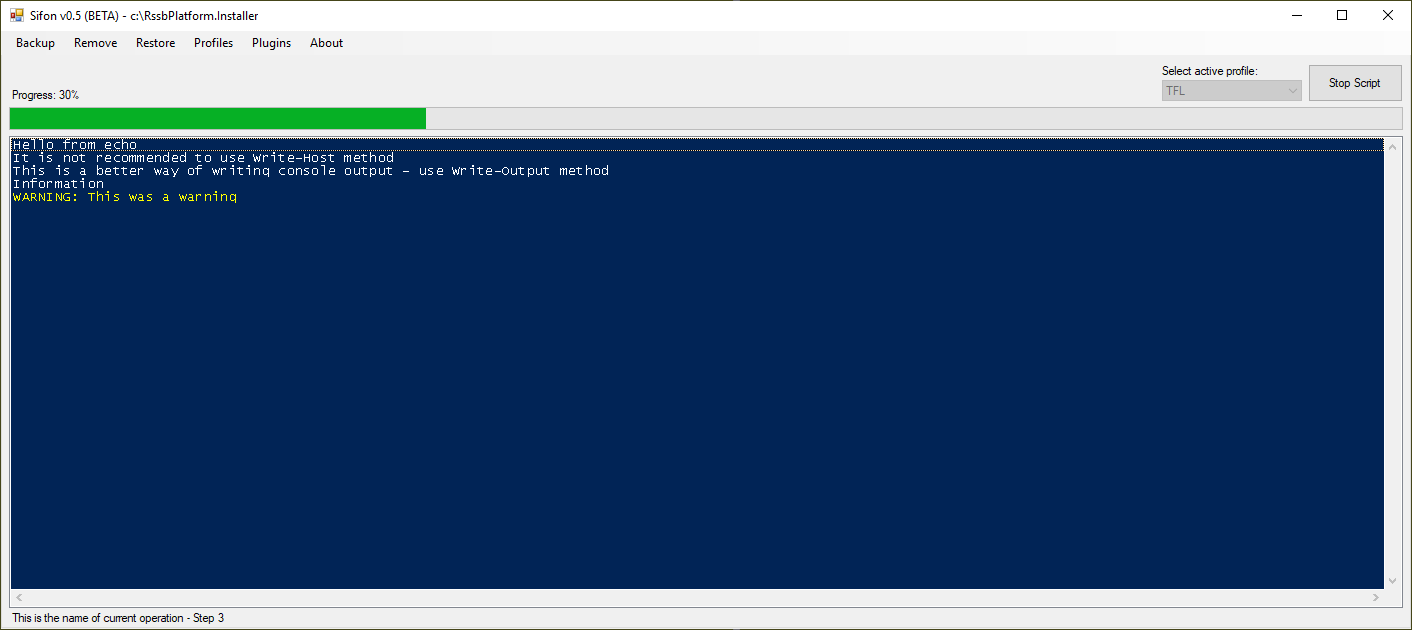

2. reporting the progress with Write-Progress. This is done with the following syntax:

Write-Progress -Activity "This is the name of current operation" -CurrentOperation "Step 1" -PercentComplete 10

Start-Sleep -Milliseconds 500

Write-Progress -Activity "This is the name of current operation" -CurrentOperation "Step 2" -PercentComplete 20

You may run the test-progress.ps1 script and review its code, as it demonstrates how progress output works with PowerShell.

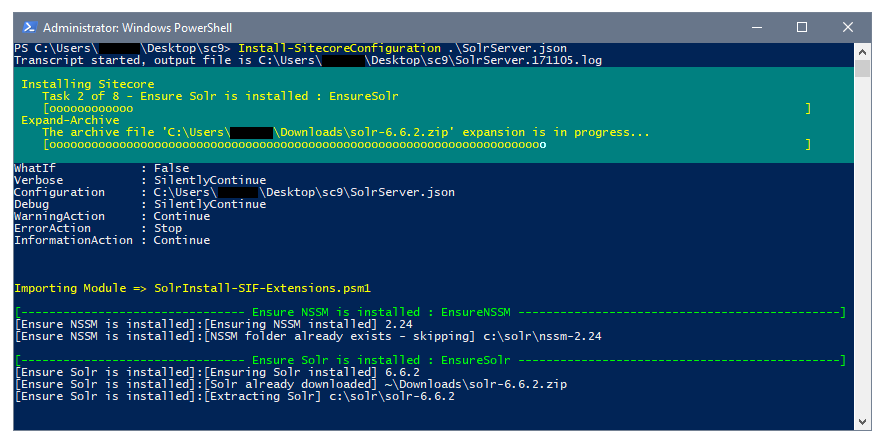
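When a plugin has more than a couple of steps, the percentage does not have to be hard-coded. The following is a small sketch of deriving it from the step index; the step names and the `Get-StepPercent` helper are made up for illustration and are not part of Sifon or of test-progress.ps1.

```powershell
# Hypothetical helper: percentage of steps already finished when step $Index (0-based) starts
function Get-StepPercent {
    param([int]$Index, [int]$Total)
    [int][math]::Floor(($Index / $Total) * 100)
}

# Made-up step names; replace them with the real work of your plugin
$steps = @('Stopping site', 'Copying webroot', 'Backing up databases', 'Starting site')

for ($i = 0; $i -lt $steps.Count; $i++) {
    $percent = Get-StepPercent -Index $i -Total $steps.Count
    Write-Progress -Activity 'Sample plugin' -CurrentOperation $steps[$i] -PercentComplete $percent
    Start-Sleep -Milliseconds 200   # stands in for the actual work of the step
}

# Tell the host that the activity has finished so the progress bar can be closed
Write-Progress -Activity 'Sample plugin' -Completed
```

Keeping the steps in a list means that adding or removing one does not require recalculating every percentage by hand.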

Have you ever noticed a light-blue progress bar when installing Sitecore with SIF (as below)?

That's it! It also means plenty of SIF functions can be converted to work with Sifon and will support displaying progress natively. A few of them will be provided upon release.

List of plugins (capsules) that will be provided upon release through GitHub:

- all types of package installation: traditional, update-packages and web deploy WDP modules

- creating an SSL certificate and adding it to the trusted storage

- enable SPE remoting for a selected instance and further execution of plugins in their context

- install the Solr service, either as a single instance or running in parallel with an already existing service (i.e. various versions)

This is the first publicly released beta, and it is downloadable from this blog post. In the future, it will be distributed from its own website along with the documentation, and there will also be an easier way of installing it:

choco install sifon

Future plans

- execute PowerShell scripts in the context of a remote machine by WSMAN (that's already supported, but no UI for it yet)

- add support for Sitecore microservices, such as xConnect, Identity Server, and Publishing Service

- in addition to the above, add full support for Commerce, its microservices, and databases

- be fully compliant with Sitecore 9.2, including the official installer script (the SIF script that comes out of the box)

- extend custom OutputFormatters to plugins, allowing presenting more friendly console output (currently works at Sifon itself)

- community plugins available through the GitHub repository

- once it matures, release Sifon itself as open source

You can download the beta by clicking this link (please download from Sifon website instead).

It can already back up and restore your instances. Samples of capsule plugins are also available through this GitHub. Just compile the DLL or drop your PowerShell script into the Scripts folder to make it available via the menu.

I hope you enjoy it, and I am looking forward to your feedback.