INTRODUCTION

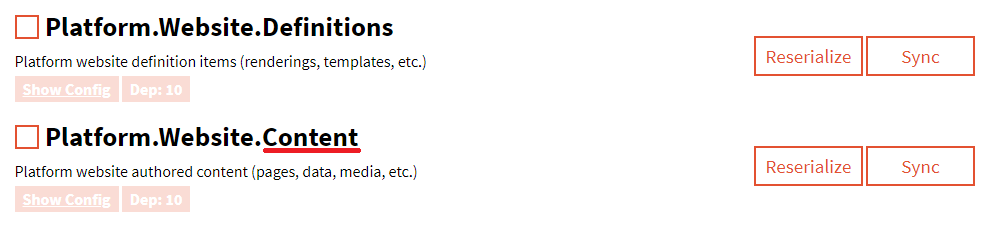

I assume you are following good practices and develop Helix-powered websites (or SXA, that follows Helix by definition). In that case, you do separate actual user-generated content items from definition items, normally shipped along with each release and created by developers (if not, then please refer to an article I've previously written on that), so you end up having a Unicorn configuration that stores all of your author-edited content:

We are going to split the process into three major steps:

- Re-serialize Unicorn configuration for content items

- Archive serialized content

- Upload the archive to Azure Blob Storage

IMPLEMENTATION

1. Re-serialize Unicorn configuration for content items. Luckily, Unicorn provides the MicroCHAP.dll library, which helps automate sync as part of your deployment process (note that the DLL and the corresponding PowerShell module, Unicorn.psm1, should be referenced from your code). The good news is that any verb can be passed to it, not just Sync, so one can use 'Reserialize'. The call looks like this:

$ErrorActionPreference = 'Stop'

$ScriptPath = Split-Path $MyInvocation.MyCommand.Path

Import-Module $ScriptPath\Unicorn.psm1

Sync-Unicorn -Verb 'Reserialize' -Configurations @('Platform.Website.Content') -ControlPanelUrl 'https://platform.dev.local/unicorn.aspx' -SharedSecret '$ecReT!'

2. Archiving the serialized content is the next step. If you click the Show Config button for the Platform.Website.Content configuration on the Unicorn control panel page, you will find all the relevant information about it, including the physical folder where items are serialized. This is the folder we need to archive. The step looks like this:

$resource = "Content_$(get-date -f yyyy.MM.dd)"

$archiveFile = "d:\$resource.7z"

$contentFolder = "C:\inetpub\wwwroot\Platform.dev.local\App_Data\serialization\Project\serialization\Content"

7z a -t7z -m0=lzma -mx=9 -mfb=64 -md=32m -ms=on $archiveFile $contentFolder

I am using 7-Zip as the archiver because my content is slightly over 4 GB, which the classic zip format cannot handle without ZIP64 extensions. As a bonus, I get the high compression ratio that 7-Zip's LZMA offers. It is also worth checking whether a file with the same name already exists at the target and deleting it before archiving, especially if you run the script more often than daily.
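The pre-archive check mentioned above could look like the following minimal sketch. The helper name `Remove-StaleArchive` is hypothetical (it is not part of Unicorn or 7-Zip) and it relies only on the built-in `Test-Path` and `Remove-Item` cmdlets. It is useful because `7z a` updates an existing archive rather than recreating it.

```powershell
# Hypothetical helper: delete a leftover archive so 7z starts from scratch.
function Remove-StaleArchive {
    param(
        [Parameter(Mandatory = $true)]
        [string] $Path
    )

    # -LiteralPath avoids wildcard interpretation of characters such as [ ]
    if (Test-Path -LiteralPath $Path -PathType Leaf) {
        Remove-Item -LiteralPath $Path -Force
        return $true   # an old archive was found and removed
    }

    return $false      # nothing to clean up
}
```

Calling `Remove-StaleArchive -Path $archiveFile | Out-Null` right before the `7z a` line should be enough.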

3. Uploading to Azure Blob Storage concludes the routine. To make this happen, you need an Azure subscription and, ideally, a connection string for your Storage account. Then you can use the following code:

$containerName = "qa-serialization"

$ctx = New-AzureStorageContext -ConnectionString "DefaultEndpointsProtocol=https;AccountName=lab1234;AccountKey=y9CFAKE6PQYYf/vVDSFAKEzxOgl/RFv03PwAgcj8K80mSfQFDojdnKfakeaLMva0S9DbrQTzNjDMdGCp7rseRw==;EndpointSuffix=core.windows.net"

Set-AzureStorageBlobContent -File $archiveFile -Container $containerName -Blob $resource -Force -Context $ctx

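It is also assumed that the blob container already exists. If it does not, create it upfront (note that -Permission blob allows anonymous read access to individual blobs; use -Permission Off if the backups must stay private):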

New-AzureStorageContainer -Name $containerName -Context $ctx -Permission blob
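If the script runs unattended, the existence check can be folded into it. Below is a minimal sketch, assuming the same Azure storage cmdlets used above are available; the function name `Confirm-BlobContainer` and its `-Permission` default of `Off` (private) are my own choices for illustration, not something the original script uses.

```powershell
# Hypothetical helper: create the blob container only when it is missing.
# Assumes the Azure storage module used elsewhere in this post is imported.
function Confirm-BlobContainer {
    param(
        [Parameter(Mandatory = $true)] [string] $Name,
        [Parameter(Mandatory = $true)] $Context,
        # Assumption: default to a private container; the command above uses 'blob'
        [string] $Permission = 'Off'
    )

    # An unknown container name raises an error; SilentlyContinue turns
    # that into an empty result, even with $ErrorActionPreference = 'Stop'.
    $existing = Get-AzureStorageContainer -Name $Name -Context $Context -ErrorAction SilentlyContinue

    if (-not $existing) {
        New-AzureStorageContainer -Name $Name -Context $Context -Permission $Permission | Out-Null
        return $true   # container was created
    }

    return $false      # container was already there
}
```

A call such as `Confirm-BlobContainer -Name $containerName -Context $ctx | Out-Null` would then sit just before the upload.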

Optionally, you may want to delete the temporary archive once it's uploaded to blob storage.

RUNNING

Here's the entire script that works for me:

# Step 1: re-serialize user-generated content

$ErrorActionPreference = 'Stop'

$ScriptPath = Split-Path $MyInvocation.MyCommand.Path

Import-Module $ScriptPath\Unicorn.psm1

Sync-Unicorn -ControlPanelUrl 'https://platform.dev.local/unicorn.aspx' -Configurations @('Platform.Website.Content') -Verb 'Reserialize' -SharedSecret '$ecReT!'

# Step 2: archive serialized user-generated content with 7zip using best compression

$resource = "Content_$(get-date -f yyyy.MM.dd)"

$archiveFile = "d:\$resource.7z"

$contentFolder = "C:\inetpub\wwwroot\Platform.dev.local\App_Data\serialization\Project\serialization\Content"

7z a -t7z -m0=lzma -mx=9 -mfb=64 -md=32m -ms=on $archiveFile $contentFolder

# Step 3: upload generated content into Azure Blob Storage

$containerName = "qa-serialization"

$ctx = New-AzureStorageContext -ConnectionString "DefaultEndpointsProtocol=https;AccountName=lab1234;AccountKey=y9CFAKE6PQYYf/vVDSFAKEzxOgl/RFv03PwAgcj8K80mSfQFDojdnKfakeaLMva0S9DbrQTzNjDMdGCp7rseRw==;EndpointSuffix=core.windows.net"

Set-AzureStorageBlobContent -File $archiveFile -Container $containerName -Blob $resource -Force -Context $ctx

# Step 4: clean up after yourself

Remove-Item $archiveFile -Force

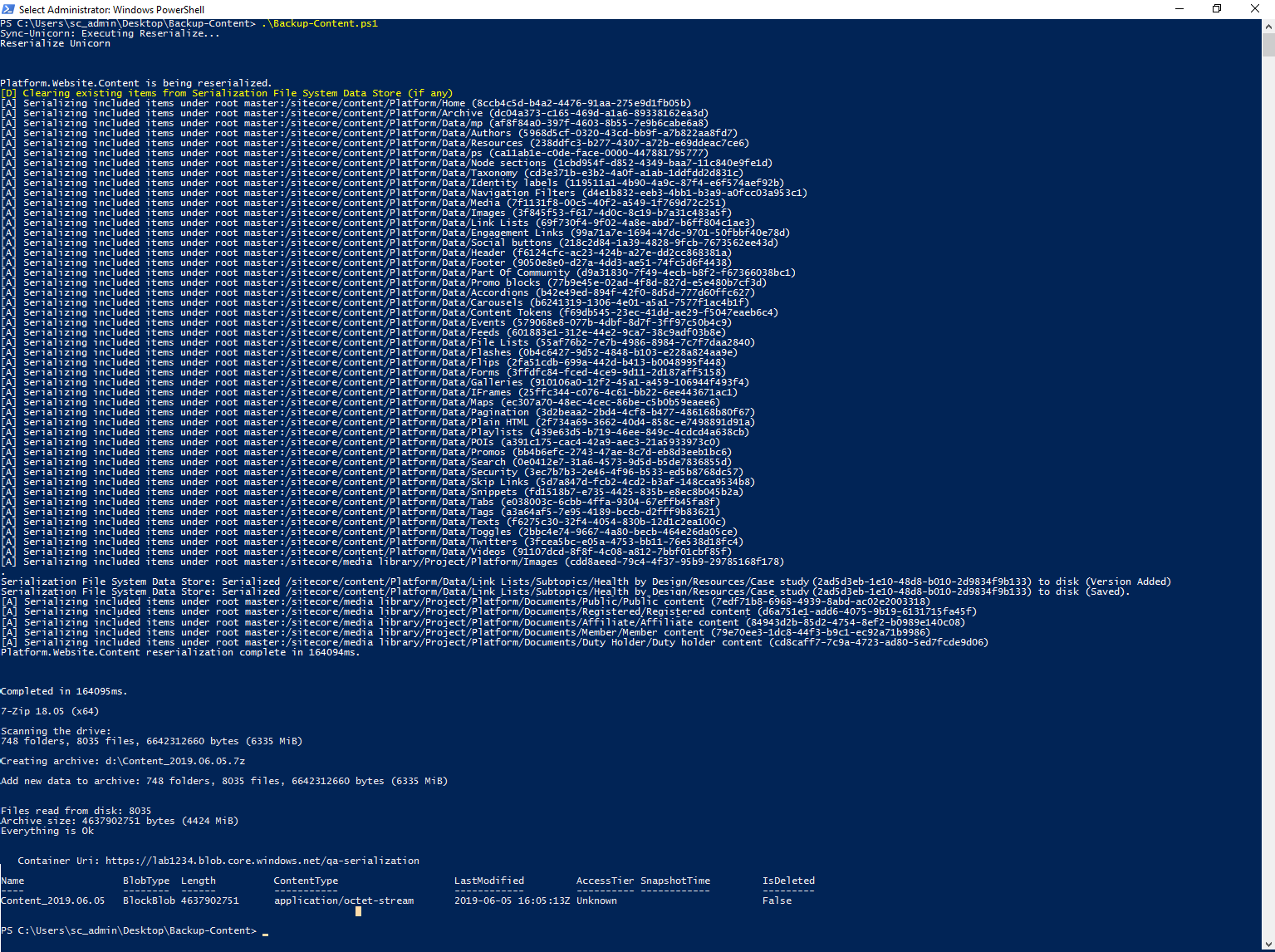

I run it on a daily basis by Windows Task Scheduler in order to get a daily snapshot of editors' activity. The script produces the following output:

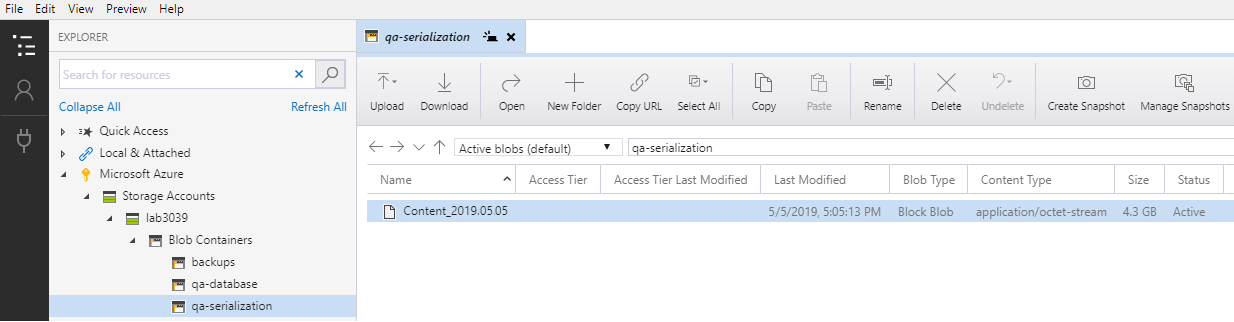

As a result of running script, I get an archive appearing in the Azure Blob Storage:
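The daily run in Task Scheduler can be registered from PowerShell too. This is a minimal sketch, assuming the ScheduledTasks module (Windows 8 / Server 2012 or later) is available; the function name `Register-ContentBackupTask`, the task name and the start time are all made up for illustration, and the session may need to be elevated.

```powershell
# Hypothetical helper: register a daily run of the backup script.
function Register-ContentBackupTask {
    param(
        [Parameter(Mandatory = $true)]
        [string] $ScriptFile,                            # full path to the backup script
        [string] $TaskName = 'Unicorn content backup',   # assumed task name
        [string] $At = '2:00AM'                          # assumed start time
    )

    # Run the script in a fresh, non-interactive PowerShell process
    $action = New-ScheduledTaskAction -Execute 'powershell.exe' `
        -Argument "-NoProfile -ExecutionPolicy Bypass -File `"$ScriptFile`""

    # Fire once a day at the requested time
    $trigger = New-ScheduledTaskTrigger -Daily -At $At

    Register-ScheduledTask -TaskName $TaskName -Action $action -Trigger $trigger
}
```

For example, `Register-ContentBackupTask -ScriptFile 'C:\scripts\Backup-Content.ps1'`, where the path is a placeholder for wherever you keep the script.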

RESTORING

There's no sense in making backups unless you confirm that restoring data from them works. For content items, download an archive, extract it, replace the content serialization folder with what you've extracted, then sync the content configuration. As simple as that!

Note that the restored content should be aligned with the definition items it was created against, or the sync may not work well!
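The restore steps described above can be sketched as follows. This is only an outline under a few assumptions: the function name `Restore-ContentBackup` and its parameters are hypothetical, `7z` is on the PATH, and the archive contains the Content folder itself (which is what `7z a` produces when given a folder path), so it is extracted into the parent folder.

```powershell
# Hypothetical restore routine mirroring the backup script above.
function Restore-ContentBackup {
    param(
        [Parameter(Mandatory = $true)] [string] $BlobName,        # blob name as uploaded, e.g. Content_<date>
        [Parameter(Mandatory = $true)] [string] $ContainerName,
        [Parameter(Mandatory = $true)] $Context,
        [Parameter(Mandatory = $true)] [string] $ContentFolder,   # the folder that was archived
        [string] $SevenZip = '7z'                                 # assumption: 7z is on the PATH
    )

    $archiveFile = Join-Path ([System.IO.Path]::GetTempPath()) "$BlobName.7z"

    # 1. Download the archive from blob storage
    Get-AzureStorageBlobContent -Blob $BlobName -Container $ContainerName `
        -Destination $archiveFile -Context $Context -Force | Out-Null

    # 2. Replace the serialization folder with the archived copy
    $parent = Split-Path $ContentFolder -Parent
    if (Test-Path -LiteralPath $ContentFolder) {
        Remove-Item -LiteralPath $ContentFolder -Recurse -Force
    }
    & $SevenZip x $archiveFile "-o$parent" -y
    if ($LASTEXITCODE -ne 0) { throw "7z failed with exit code $LASTEXITCODE" }

    # 3. Remove the downloaded archive
    Remove-Item -LiteralPath $archiveFile -Force
}
```

Once the folder is back in place, the same `Sync-Unicorn` call as in step 1 of the backup, with `-Verb 'Sync'` instead of `'Reserialize'`, loads the items into Sitecore.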

Hope this post helps!