There was much of recent buzz around containers as technology and Docker in particular, especially now where more and more community efforts focused at Docker in conjunction with Sitecore. Plenty of articles do explain how it works on the very top level, what the benefits are but very rare do precise guidance. As for an ultimate beginner - I know how it is important to get a quick start, the minimal positive experience as a further point of development. This blog exactly about how to achieve the bare minimal Sitecore running in Docker.

In order to operate the repository for given walk-through, you need to have Windows 10 x64 with at least build 1809.

Once done, you'll need to switch a mode. Docker for Windows can work in either Windows or Linux mode at the same time - which means you cannot mix types of containers.

One of the biggest issues at the moment is the size of Windows base images - the minimal Nano Server with almost everything cut off is already 0.5Gb and Server Core (this one either does not have UI - just a console) goes to 4Gb. That's too much comparing to minimal Linux images starting from as little as 5 megs. That's why it may seem very attractive to run Solr from Linux image (as both Solr and Java it requires are cross-platform and there are ready-to-use images there), same for Ms SQL Server which also has been ported on Linux and its images are also available.

Until the very-very recent the short answer was no - one could manage only single type of container at a time (while already running Linux container will keep up running unmanageable, there are also few workarounds how to make them work in parallel, but that's out of scope for now), but as from April 2019 it is doable (from Linux mode on Windows), I managed to combine NGINX on Linux with IIS on Windows.

Switching mode from UI is done by right-clicking Docker icon context menu from a system tray:

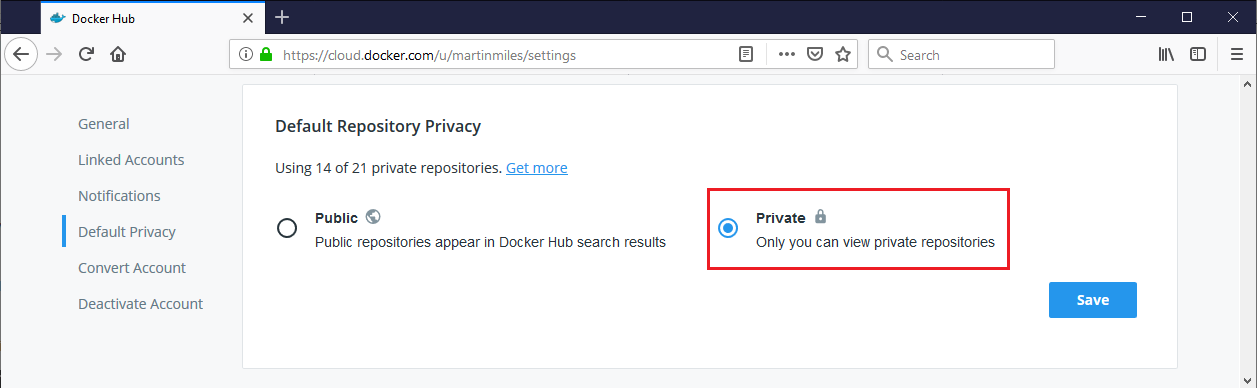

Docker Hub however allows only one private repository for free. You need to ensure sure all your repositories are private. Images you're building will contain your license file, having them in a public access will also be treated as sharing Sitecore binaries on your own, which you're not allowed - only Sitecore can distribute that publicly.

Alternatively, you may consider Canister project, they give up to 20 private repositories for free.

Pluralsight has a course on how to implement your own self-hosted docker registry.

But what is even more surprising, Docker itself provides a docker image with Docker Registry for storing and distributing Docker images.

4. Preparing images

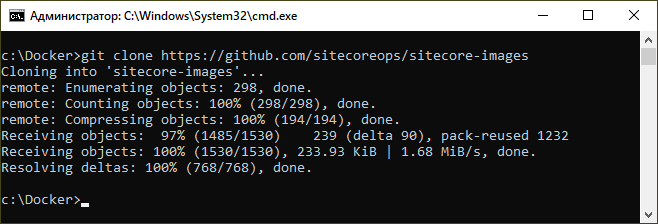

Now let's clone Sitecore images repository from GitHub - https://github.com/sitecoreops/sitecore-images

If you don't have git installed - use Chocolatey tool already familiar from previous steps: cinst git (once complete - you'll need to reopen console window so that PATH variable gets updated).

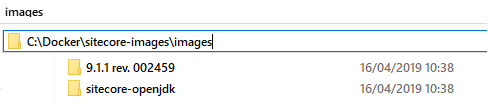

To keep things minimal, I go to sitecore-images\images folder and delete everything unwanted - as for this demo, I keep only 9.1.1 images, removing the rest. So, I left only 9.1.1 images and sitecore-openjdk required for Solr:

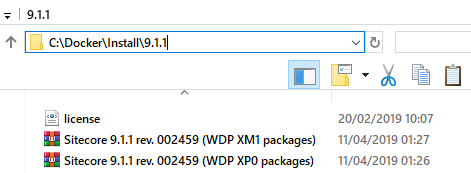

Images folder contains instructions on how to build you new Sitecore images. As input data they require Sitecore installers and license file, so put them into this folder:

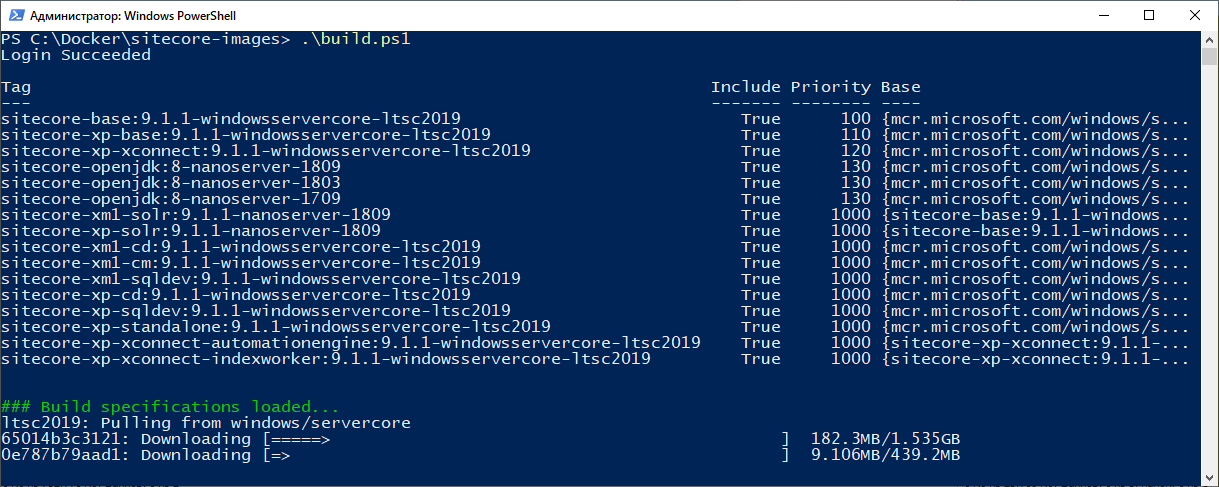

And the last but not the least, create build.ps1 PowerShell script.

Important: do not use an email as the username, it's not your email (in my case username is martinmiles), and from what I've heard many people find this confusing and were wondering why getting some errors.

This is my build script, replace usernames and password and you're good to go:

"YOUR_DOCKER_REGISTRY_PASSWORD" | docker login --username martinmiles --password-stdin

# Load module

Import-Module (Join-Path $PSScriptRoot "\modules\SitecoreImageBuilder") -Force

# Build and push

SitecoreImageBuilder\Invoke-Build `

-Path (Join-Path $PSScriptRoot "\images") `

-InstallSourcePath "c:\Docker\Install\9.1.1" `

-Registry "martinmiles" `

-Tags "*" `

-PushMode "WhenChanged"

5. Building images

Run the build script. If receiving security errors, you may also be required to change execution policy prior to running build:

set-executionpolicy unrestricted

Finally you get your base images downloading and build process working:

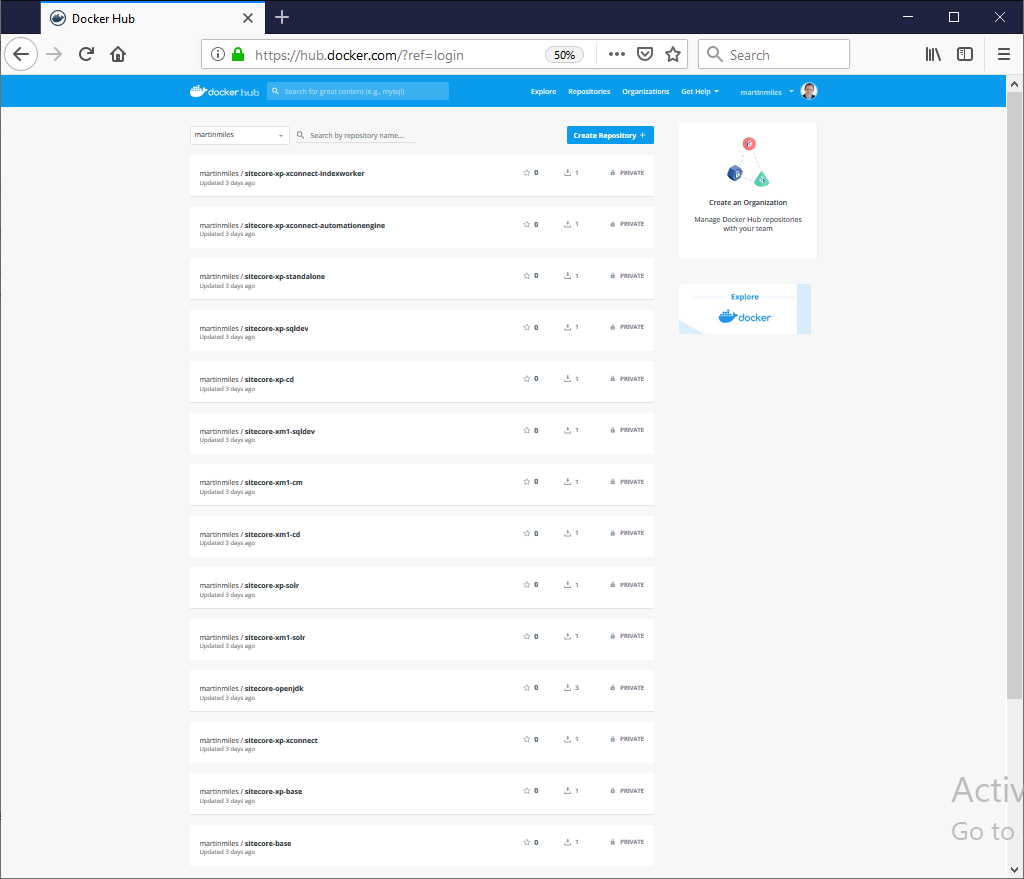

As I said, the script pulls all the base images and builds, as scripted. Please be patient as it may take a while. Once built, your images will be pushed to the registry. Here's what I finally got - 15 images built and pushed to Docker Hub. Again, please pay attention to Private badge next to each repository:

Docker Hub has a corresponding setting for defining privacy defaults:

6. Running containers

Images are built and pushed to registry, so we are OK to run them now. Navigate to tests\9.1.1 rev. 002459\ltsc2019 folder where you see two docker-compose files - one for XM topology and another for XP. If simplified, docker compose is a configuration for running multiple containers together defining common virtual infrastructure, it is written in YAML format.

Since we are going the simplest route - we keep with XM topology, but that same principle works well for anything else.

Rename docker-compose.xm.yml to docker-compose.yml and open it in the editor. What you see is a declarative YAML syntax of how containers will start and interact with each other.

version: '2.4'

services:

sql:

image: sitecore-xm1-sqldev:9.1.1-windowsservercore-ltsc2019

volumes:

- .\data\sql:C:\Data

mem_limit: 2GB

isolation: hyperv

ports:

- "44010:1433"

solr:

image: sitecore-xm1-solr:9.1.1-nanoserver-1809

volumes:

- .\data\solr:C:\Data

mem_limit: 1GB

isolation: hyperv

ports:

- "44011:8983"

cd:

image: sitecore-xm1-cd:9.1.1-windowsservercore-ltsc2019

volumes:

- .\data\cd:C:\inetpub\sc\App_Data\logs

isolation: hyperv

ports:

- "44002:80"

links:

- sql

- solr

cm:

image: sitecore-xm1-cm:9.1.1-windowsservercore-ltsc2019

volumes:

- .\data\cm:C:\inetpub\sc\App_Data\logs

isolation: hyperv

ports:

- "44001:80"

links:

- sql

- solr

If your docker-compose has isolation set to process, please change it to hyperv (this is mandatory hosts on Windows 10, while on Windows Server docker can also run its process natively). In that case, processes will run in a hypervisor and is not a naked process next to native windows processes, that prevents you from memory allocations errors such as PAGE_FAULT_IN_NONPAGED_AREA and TERMINAL_SERVER_DRIVER_MADE_INCORRECT_MEMORY_REFERENCE

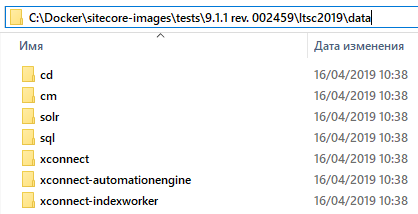

Notice data folder? This is how volumes work in docker. All these folders within data are created on your host OS file system - upon creation, a folder from container is mapped to a folder on a host system, and once container terminates, data still remains persistent on a host drive.

For example, one running SQL Server in docker can place and reference SQL database files (*.mdf and *.ldf files) on a volume externally in such a manner so that databases actually exist on a host OS and will not be re-created on each container run.

My data folder already has data folders mapped to data folders from all the various roles' containers run on previous executions (yours will be blank at that moment before the first run):

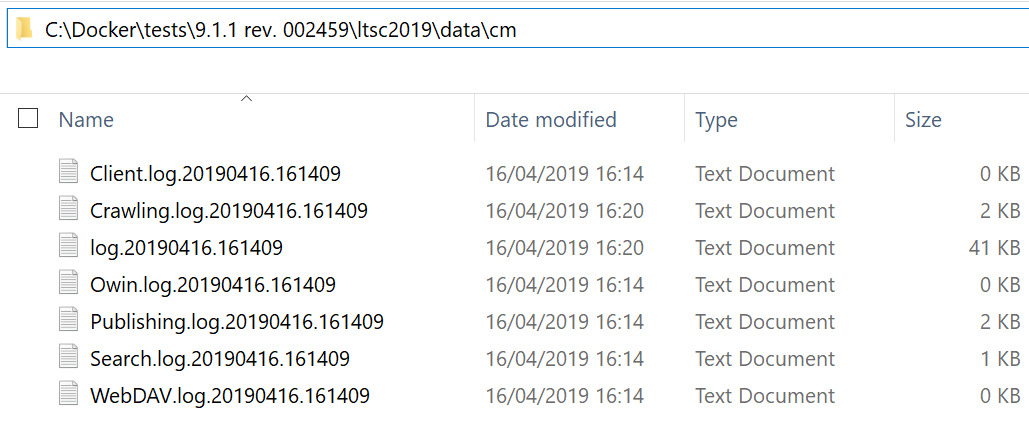

Just for a curiosity, below is an example of what you can find within cm folder, looks familiar, right?

Anyway, we are ready to run docker-compose:

docker-compose up

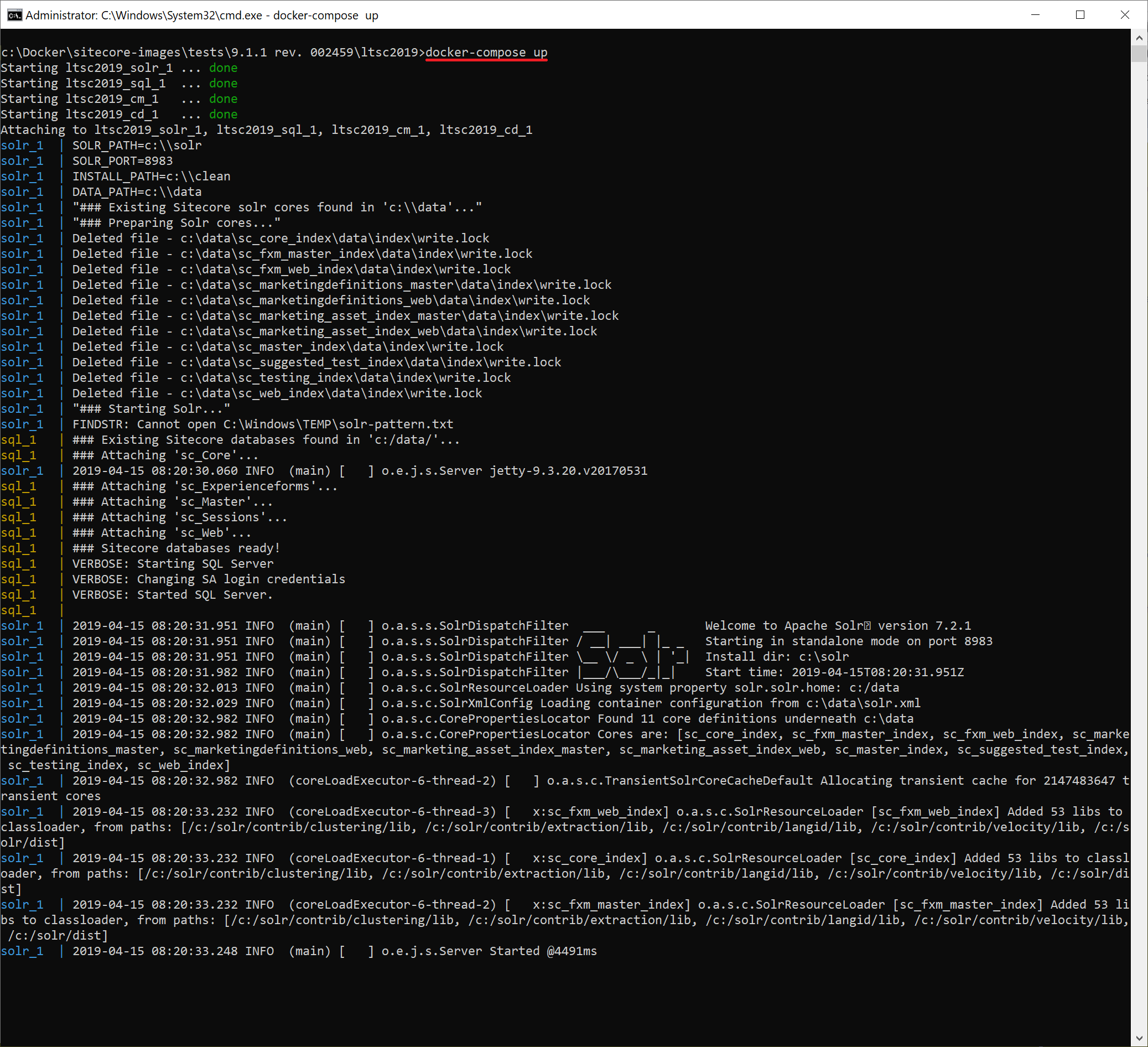

You'll then see 4 containers being created, then Solr container starts making its job, providing plenty of output:

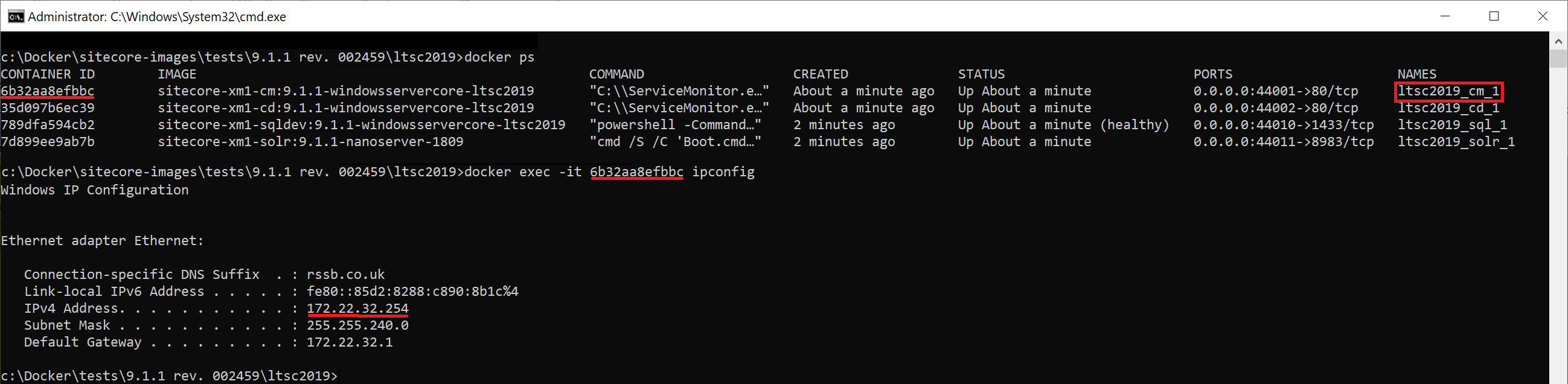

In a minute you'll be able to use these containers. In order to log into Sitecore, one needs to know an IP address of a container running a particular role - so need to refer to a cm container. Every container has its hash value which serves as an identifier, so with docker ps command you can list all docker containers currently running, get hash of cm and execute ipconfig command within a context of that cm container (so that ipconfig runs inside of it internally):

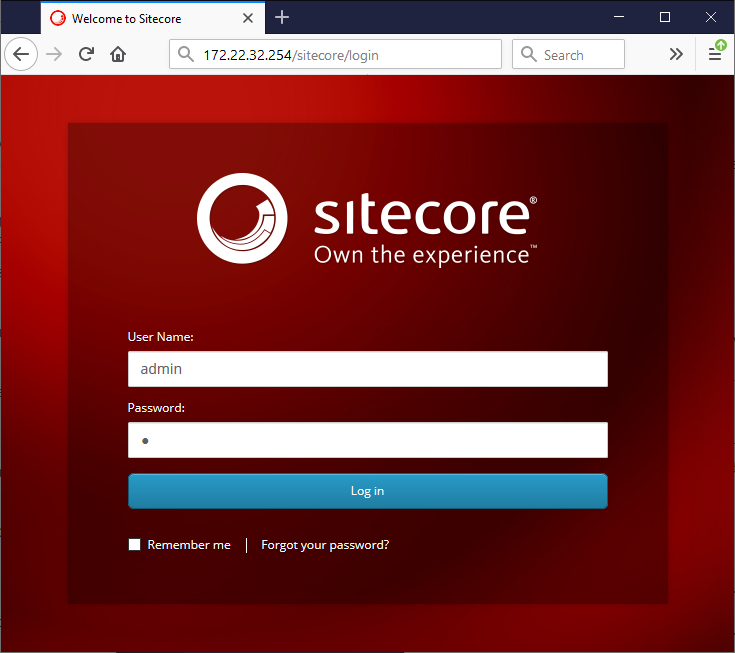

Now I can call 172.22.32.254/sitecore in order to login to CMS:

What else can you do?

With docker you may also execute commands in interactive mode (sample) with -it switch, so you may do all the things such as deploying your code there (it is always good to deploy on top of clean Sitecore instance). That's how to enter an interactive session with remote command prompt:

docker exec -it CONTAINER_HASH cmd

You may go with more folder mappings using volume. Running XP topology offers even more interesting but safe playground for experiments.

Building other versions of Sitecore allows regression testing your code against legacy systems - always quick manner and always on clean! Going ahead you may use it for the development having only Visual Studio running on a host machine, with no IIS and no SQL server installed, publishing from VS directly in docker. Plenty other scenarios possible - it's only for you to choose from.

7. Stopping and clean-up

Stopping containers occurs in a similar way:

docker-compose down

After finished, you won't see any of the containers running by executing

docker ps

You'll be still able to see existing images on a system and their size occupied:

docker image ls

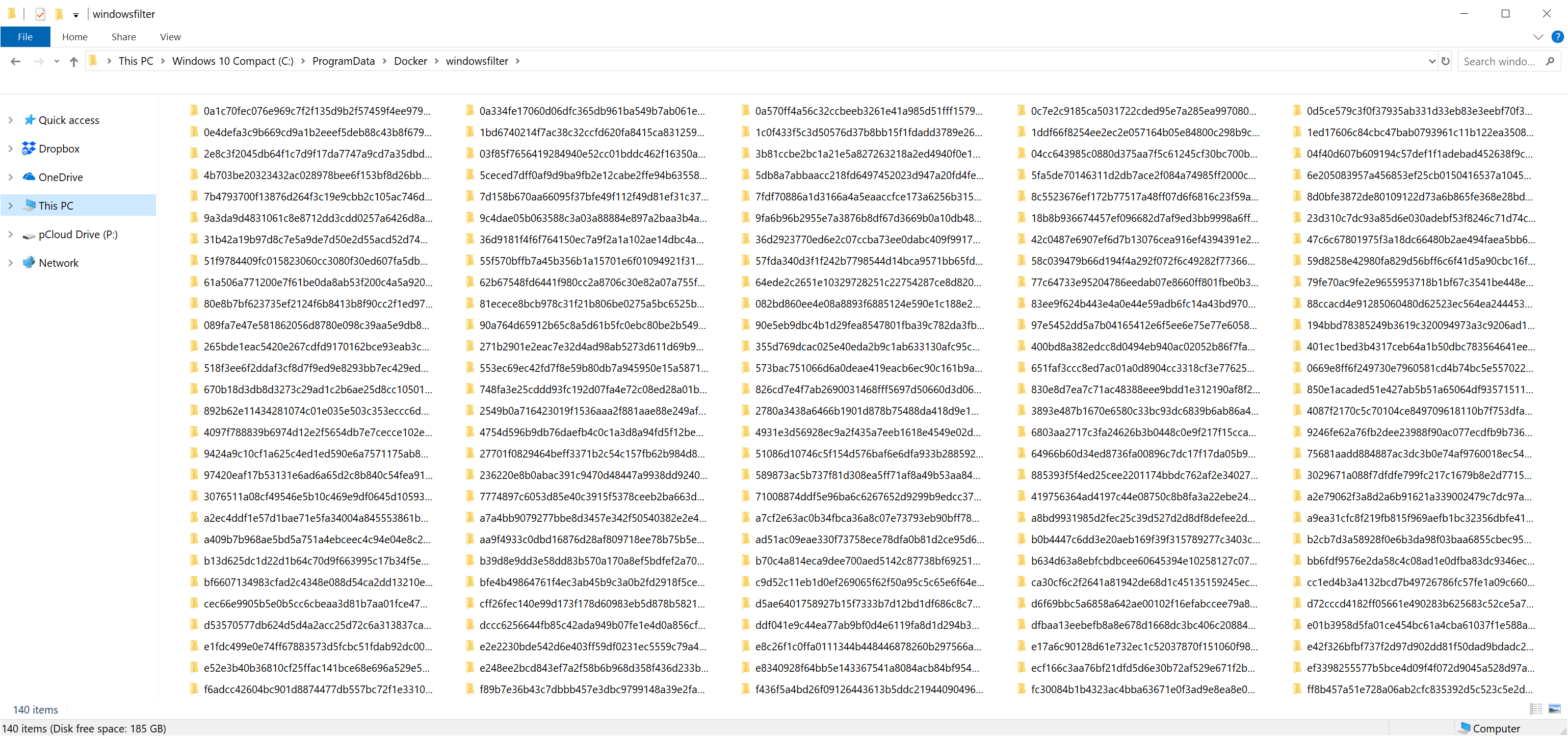

So if finally, after playing it over and want to clean-up you drive a noticed there's way less free disk space now. I want to beware you from doing one more common mistake - don't delete containers data manually! If you navigate to c:\ProgramData\Docker\windowsfilter folder, one can see plenty of them:

These are not container folders, they are symlinks (references) to windows system resources folders - deleting data from these symlinks actually deleted you resources from your host OS bringing you to a sorry state. Instead, use the command:

docker prune -a

This gets rid of all the images and containers from your host system correctly and safely.

8. Afterthoughts

Docker is a very strong and flexible tool, it is great for devops purposes. I personally find questionable using it for production purposes. That may be fine with Linux containers, but as for Windows... I'd rather opt out, for now, however I am aware of people doing that.

Proper use docker will definitely improve your processes, especially combining it with other means of virtualisation. Containers may take you a while to get properly into, but after getting your hands on you'll have your cookbook of docker recipes for plenty of day-to-day tasks.

As for Sitecore world, I do understand it is all only starting yet, but docker with Sitecore becomes more inevitable, as Sitecore drills deeper into microservices. Replacing Solr and SQL Server with Linux-powered images is only a matter of time, and what I am anticipating so much is XP and XC finally running together in Docker, facaded by IDS (ideally also on Linux) just in fractions after calling docker-compose. Fingers crossed for that.

Hope this material serves you as a great starting point for containers and Docker!