... this post is the next step after "Editing content on a CD server. Part 1. MVC ajax request to controller".

Now the question is how to implement the server logic that stores the value. For a moment it may seem pretty straightforward: get the item by its ID, update the field and return the result. In fact it is not, because of Sitecore's architectural principles.

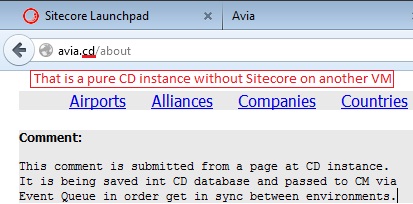

Our page runs on a CD (content delivery) server, and the item we are trying to update comes from the CD database (traditionally called "web"). If we edit the item in the "web" database, we end up in a situation where "web" contains the updated version while "master" does not. Publishing is a one-way process that copies items from the CM database to the CD databases (or simply from "master" to "web"), so the next publish may overwrite the updated item in "web" with the outdated version from "master". Writing directly to the CM database from CD is a violation of architectural principles. It is technically possible on a default developer installation of Sitecore, but in the real world CD servers do not keep a "master" connection string, which makes it impossible. So, what should we do in that case?

Luckily, there are several ways of solving this scenario. Each has its own pros and cons, so it is worth thinking well ahead about which one (if any) is applicable to your solution.

Solution 1: Allocate a separate database alongside web to store all user-editable content. Normal items and non-user content remain in the web database as usual. Here is a great article describing that approach:

Solution 2: Employ the Sitecore Event Queue to notify CM about CD changes, so that as soon as CM receives the update event, it updates itself with the latest change coming from CD and then re-publishes the change to the rest of the CD databases in order to keep them in sync. We describe this approach below.

Sitecore has a mechanism called remote events that allows communication between instances. It is implemented via the "core" database, which has an EventQueue table that is polled at short intervals (for example every 2 seconds). We always have a connection string to the core database on our CD, at least for authorization / security purposes, but also to support the Event Queue, which serves as the transport for publishing operations.

So, the process of saving a comment on the back end now looks fairly complicated. When the post request comes in, we still save the changes into the CD ("web") database and queue a custom event. On CM we add a handler for that event (raised locally as updatecomment:remote) which runs our custom code and updates the same item in the master database. Finally, you may programmatically re-publish the updated item to the other CD instances (if there are several) that do not have the updated version yet.

Now let's look at the code that implements everything described above. AjaxController will include additional code right before returning JSON back to the browser:

    UpdateCommentEvent evt = new UpdateCommentEvent();
    evt.Id = item.ID.ToString();
    evt.FieldName = fieldName;
    evt.Value = database.GetItem(id).Fields[fieldName].Value;
    Sitecore.Eventing.EventManager.QueueEvent<UpdateCommentEvent>(evt);

What we're doing here is just queueing an event. UpdateCommentEvent is a custom event written by us. It is a normal C# class, but please pay attention to the DataContract and DataMember attributes, which are required for serialization. Here is how UpdateCommentEvent is defined within the code:
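The original listing is not reproduced here, so the following is only a minimal sketch of what such a class might look like. It assumes three string properties named after the ones the controller snippet assigns (Id, FieldName, Value) and uses the standard .NET `System.Runtime.Serialization` attributes; the namespace is borrowed from the handler class shown later in this post.

```csharp
using System.Runtime.Serialization;

namespace Website.Code.Events
{
    // Sketch of the custom event that travels through the Event Queue.
    // Property names follow the controller snippet; everything else is an assumption.
    [DataContract]
    public class UpdateCommentEvent
    {
        // ID of the item edited on CD, stored as a string
        [DataMember]
        public string Id { get; set; }

        // Name of the field that was changed
        [DataMember]
        public string FieldName { get; set; }

        // New field value that CM should apply
        [DataMember]
        public string Value { get; set; }
    }
}
```

Only members marked with DataMember are serialized, so a property without the attribute would not reach the CM side.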

And, of course, we define UpdateCommentEventArgs that comes along with our new event:
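As the listing itself is missing here, this is a hedged sketch of such an arguments class. It assumes UpdateCommentEvent exposes string properties Id, FieldName and Value (as used in the controller snippet), and it assumes the class implements Sitecore's `IPassNativeEventArgs` marker interface so that the handler receives this object directly rather than a wrapper. It is meant to be compiled inside the Sitecore project next to UpdateCommentEvent.

```csharp
using System;
using Sitecore.Events;

namespace Website.Code.Events
{
    // Sketch only: carries the data of UpdateCommentEvent into the local event.
    // IPassNativeEventArgs is a marker interface; with it, handlers get this
    // instance as their EventArgs instead of a SitecoreEventArgs wrapper.
    public class UpdateCommentEventArgs : EventArgs, IPassNativeEventArgs
    {
        public string Id { get; private set; }
        public string FieldName { get; private set; }
        public string Value { get; private set; }

        public UpdateCommentEventArgs(UpdateCommentEvent evt)
        {
            if (evt == null)
            {
                throw new ArgumentNullException("evt");
            }

            // Copy the three values the CM handler needs
            Id = evt.Id;
            FieldName = evt.FieldName;
            Value = evt.Value;
        }
    }
}
```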

Implementation on the CM side: first of all, we need to specify a new hook.

...
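For orientation, hooks are usually registered under the `hooks` node of the Sitecore configuration, typically through a patch file. The fragment below is only a sketch of that shape, not the original configuration: the class name UpdateCommentHook and the assembly name Website are assumptions made for illustration.

```xml
<configuration xmlns:patch="http://www.sitecore.net/xmlconfig/">
  <sitecore>
    <hooks>
      <!-- Hypothetical type and assembly names; replace with your own -->
      <hook type="Website.Code.Events.UpdateCommentHook, Website" />
    </hooks>
  </sitecore>
</configuration>
```

A patch like this would be deployed to the CM instance only, since that is where the event needs to be handled.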

Here's the code referenced by the hook specified above. We subscribe to our event, and once it arrives we call the Run method, which raises a local (CM-side) event:

We need to specify a new local event called updatecomment:remote in the configuration and associate it with a handler method:

And here is the OnUpdateCommentRemote event handler that fires on CM. It receives the event arguments, casts them to UpdateCommentEventArgs and extracts the data from them. In order to update an item on CM we need three parameters: the item ID, the name of the field to update and the new value. All three are stored in the event arguments, so we are now able to update the item in the master database.
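The hook listing is not shown here, so the following is a sketch under assumptions rather than the original code. It assumes a hook class named UpdateCommentHook (a hypothetical name) that implements Sitecore's `IHook` interface and uses `EventManager.Subscribe` to route incoming UpdateCommentEvent instances to the static Run method of UpdateCommentEventHandler.

```csharp
using Sitecore.Eventing;
using Sitecore.Events.Hooks;

namespace Website.Code.Events
{
    // Hypothetical hook class; the name UpdateCommentHook is an assumption.
    public class UpdateCommentHook : IHook
    {
        // Sitecore calls Initialize once at startup for each registered hook.
        public void Initialize()
        {
            // Whenever an UpdateCommentEvent is read from the Event Queue,
            // pass it to Run, which raises the local updatecomment:remote event.
            EventManager.Subscribe<UpdateCommentEvent>(UpdateCommentEventHandler.Run);
        }
    }
}
```

Subscribing in a hook keeps the wiring out of the handler class itself and ensures the subscription exists before any queued events are processed.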
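A sketch of how that registration might look as a config patch. The event name, namespace, class and method name come from this post; the assembly name Website is an assumption.

```xml
<configuration xmlns:patch="http://www.sitecore.net/xmlconfig/">
  <sitecore>
    <events>
      <event name="updatecomment:remote">
        <!-- Assembly name "Website" is assumed for illustration -->
        <handler type="Website.Code.Events.UpdateCommentEventHandler, Website" method="OnUpdateCommentRemote" />
      </event>
    </events>
  </sitecore>
</configuration>
```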

    using System;
    using Sitecore.Configuration;
    using Sitecore.Data.Items;
    using Sitecore.Events;
    using Sitecore.SecurityModel;

    namespace Website.Code.Events
    {
        public class UpdateCommentEventHandler
        {
            /// <summary>
            /// Handler for the local updatecomment:remote event,
            /// implemented the same way as any ordinary event handler.
            /// </summary>
            public virtual void OnUpdateCommentRemote(object sender, EventArgs e)
            {
                var args = e as UpdateCommentEventArgs;
                if (args == null)
                {
                    return;
                }

                var master = Factory.GetDatabase("master");

                using (new SecurityDisabler())
                {
                    var itemOnMaster = master.GetItem(args.Id);
                    if (itemOnMaster == null)
                    {
                        return; // the item does not exist in master, nothing to update
                    }

                    using (new EditContext(itemOnMaster))
                    {
                        itemOnMaster[args.FieldName] = args.Value;
                    }
                }

                // also do publishing to other CD here, if required
            }

            // This method is used to raise the local event
            public static void Run(UpdateCommentEvent evt)
            {
                var args = new UpdateCommentEventArgs(evt);
                Event.RaiseEvent("updatecomment:remote", new object[] { args });
            }
        }
    }

If there are multiple CD environments (at least one apart from the one where we edit the comment) you will also need to re-publish from CM to those CDs in order to keep them all in sync.
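One possible way to do that re-publish from CM is sketched below, using Sitecore's `PublishManager.PublishItem`. The helper class CommentPublisher and the idea of passing target database names as parameters are assumptions for illustration; the actual names of your other CD databases (for example "web2") depend on your setup.

```csharp
using Sitecore.Configuration;
using Sitecore.Data;
using Sitecore.Data.Items;
using Sitecore.Publishing;

namespace Website.Code.Events
{
    // Hypothetical helper, not part of the original post.
    public static class CommentPublisher
    {
        // targetNames: names of the CD databases that still hold the old version
        public static void PublishToTargets(Item itemOnMaster, params string[] targetNames)
        {
            var targets = new Database[targetNames.Length];
            for (int i = 0; i < targetNames.Length; i++)
            {
                targets[i] = Factory.GetDatabase(targetNames[i]);
            }

            // Publish only this item (deep: false) in its own language,
            // without the revision comparison (compareRevisions: false).
            PublishManager.PublishItem(
                itemOnMaster,
                targets,
                new[] { itemOnMaster.Language },
                false,
                false);
        }
    }
}
```

It could be called from OnUpdateCommentRemote once the edit on master has completed, for instance `CommentPublisher.PublishToTargets(itemOnMaster, "web2")`, where "web2" is again an assumed database name.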

So, in these two blog posts we described how to make an AJAX MVC call to a controller on the back-end server and update user-editable content on a CD environment while keeping it in sync with the other environments. Hope this post helps you understand Sitecore architecture better.

See also (additional references):

Sitecore.Support.RemoteEventLogging

Tweak to log all item:saved:remote events of EventQueue that were just processed.

https://bitbucket.org/sitecoresupport/sitecore.support.remoteeventlogging/wiki/Home