There are a variety of Reverse Proxy solutions on the market. You may have already heard about some:

Major cloud providers also have their proprietary solutions:

But what is a Reverse Proxy? Why "Reverse"?

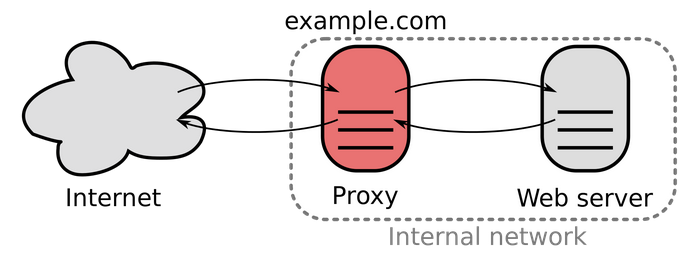

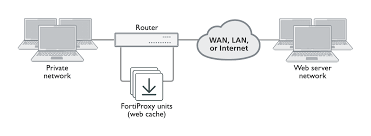

As Wikipedia says, a reverse proxy is a common type of proxy server that is accessible from the public network. Large websites and content delivery networks use reverse proxies, together with other techniques, to balance the load between internal servers. Reverse proxies can keep a cache of static content, which further reduces the load on these internal servers and the internal network. It is also common for reverse proxies to add features such as compression or TLS encryption to the communication channel between the client and the reverse proxy.

Reverse proxies are typically owned or managed by the web service, and they are accessed by clients from the public internet. In contrast, a forward proxy is typically managed by a client (or their company) who is normally restricted to a private, internal network. The client can, however, access the forward proxy, which then retrieves resources from the public internet on behalf of the client. Here's a reverse proxy in action from a very high level:

What are typical scenarios for using a Reverse Proxy?

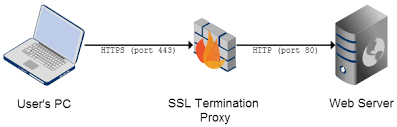

1. SSL Offload. Let's assume we have a website that works over HTTP only, and for some reason (legacy code, developers who have left, a running solution too large to change safely, or anything else) it is not possible to change the website itself: the "If it works, don't touch it" paradigm in action. For compliance, we must add HTTPS support to that website.

With a Reverse Proxy this becomes a quick and easy job, and we don't need developers at all. All we need is to ask our Ops professional to set up a proxy server with SSL Termination (obviously, we'll also need SSL certificates for the domain hostname(s) of the website). Job done!
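To make the idea a bit more tangible, here is a minimal Python sketch of what happens right after the proxy has terminated TLS: the request is re-issued to the legacy site over plain HTTP, with headers telling the backend what the original connection looked like. It does not terminate TLS itself. The `UPSTREAM` address and the `to_upstream` function are hypothetical names chosen for illustration; `X-Forwarded-Proto` and `X-Forwarded-For` are the commonly used de-facto headers for this purpose.

```python
from typing import Dict, Tuple

# Hypothetical address of the legacy HTTP-only website behind the proxy.
UPSTREAM = "http://127.0.0.1:8080"


def to_upstream(client_ip: str, path: str, headers: Dict[str, str]) -> Tuple[str, Dict[str, str]]:
    """Turn a request received over HTTPS into a plain-HTTP upstream request.

    Returns the upstream URL and the headers to send with it.
    Header lookup here is case-sensitive, which is a simplification.
    """
    forwarded = dict(headers)  # do not mutate the caller's headers
    # Let the backend know the client originally connected over HTTPS.
    forwarded["X-Forwarded-Proto"] = "https"
    # Append the client address to any chain set by earlier proxies.
    prior = headers.get("X-Forwarded-For")
    forwarded["X-Forwarded-For"] = f"{prior}, {client_ip}" if prior else client_ip
    return UPSTREAM + path, forwarded
```

The legacy website stays untouched; it simply keeps receiving HTTP requests, now from the proxy instead of from the clients directly.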

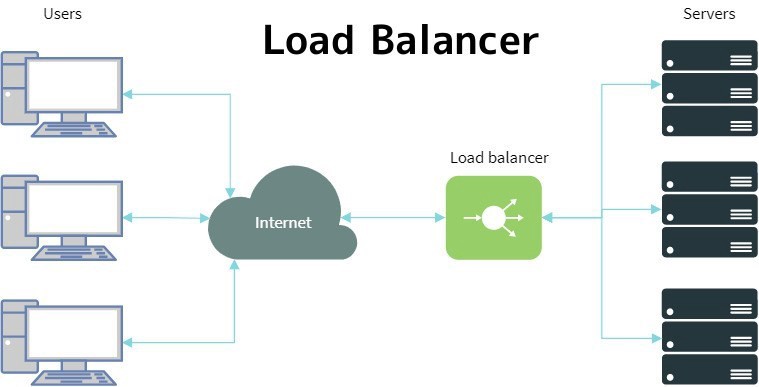

2. Load Balancer. Next, we want to scale that website horizontally, so we have deployed two identical client-facing copies of it. How do we "split" the traffic to distribute it equally between both copies? In this case we introduce a Proxy Server functioning as a Load Balancer.

But what if one of the websites dies or crashes halfway down the road? The Load Balancer needs to know somehow that each of the "boxes" functions well, and to react to outages by redistributing traffic to the remaining healthy machines. This is traditionally implemented by "pinging" a so-called "HealthCheck" URL on each box. As soon as one of the health checks keeps failing, an alert is raised and traffic is no longer routed to the faulty box (be careful with sticky sessions!).
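The selection logic described above could be sketched roughly like this in Python. It is a simplified round-robin balancer, not a production implementation: `RoundRobinBalancer` is a hypothetical name, and the `is_healthy` callback stands in for the real "ping the HealthCheck URL" step, which in practice would be an HTTP request run periodically in the background.

```python
from typing import Callable, Iterable, List


class RoundRobinBalancer:
    """Hand out backends in turn, skipping the ones that fail the health check."""

    def __init__(self, backends: Iterable[str], is_healthy: Callable[[str], bool]):
        self.backends: List[str] = list(backends)
        self.is_healthy = is_healthy  # assumed stand-in for a HealthCheck URL probe
        self._next = 0  # index of the backend to try next

    def pick(self) -> str:
        """Return the next healthy backend, or raise if none is left."""
        for _ in range(len(self.backends)):
            backend = self.backends[self._next]
            self._next = (self._next + 1) % len(self.backends)
            if self.is_healthy(backend):
                return backend
        raise RuntimeError("no healthy backends available")


if __name__ == "__main__":
    down = {"box-2"}  # pretend the second box is failing its health check
    lb = RoundRobinBalancer(["box-1", "box-2"], lambda b: b not in down)
    print([lb.pick() for _ in range(3)])
```

Note that this sketch picks a backend per request, which is exactly why sticky sessions need care: a user whose session lives on a failed box will land on another one.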

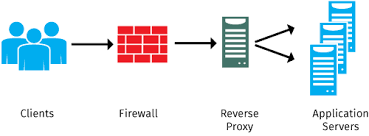

3. Cybersecurity enforcement. By sending specially crafted packets, hackers can mount a Denial-of-Service attack, where sending a request is many times cheaper than serving it. At some point your servers won't cope with this parasitic workload and will fail.

To prevent that, dangerous traffic should not reach your servers at all; it should be filtered at the proxy, namely by a Firewall with an adequate rule set that filters out all patterns with anomalies, raises alerts, and lets the legitimate requests pass through.
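As one example of such a rule, here is a small Python sketch of per-client rate limiting with a sliding time window. This is only one of many possible filters and far from a complete firewall; the class name `SlidingWindowLimiter` and its parameters are assumptions made for illustration.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client within `window_seconds`."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window = window_seconds
        # Timestamps of recently accepted requests, per client address.
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, client_ip: str, now: float) -> bool:
        """Return True if the request may pass, False if it should be dropped."""
        hits = self._hits[client_ip]
        # Forget requests that have fallen out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: filter it out at the proxy
        hits.append(now)
        return True
```

The timestamp is passed in explicitly to keep the sketch easy to test; a real proxy would take it from a monotonic clock and would probably also raise an alert when a client is blocked.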

4. Caching and compressing. Even with purely legitimate traffic passing the proxy, the servers may still face a heavy request load. How come? There may be different reasons, such as usage patterns where all users navigate to the same heavily loaded area of the website; alternatively, the website could have been written by junior developers who did not care enough about how it performs once deployed. Regardless of the reason, we can still soften things by identifying the popular endpoints that consume most of the servers' resources and caching their responses right at the proxy level. Assuming, of course, that a given set of parameters always returns the same result, there is no longer a need to spend expensive server resources on producing results we have already obtained and cached at the proxy; such requests never reach the servers at all. If, however, this traffic must reach the end servers, we can at least compress / encode the "last mile" beyond the proxy.
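A rough Python sketch of both ideas follows. The cache is keyed by path and parameters and expires entries after a time-to-live, and the compression helper simply uses gzip from the standard library. `ResponseCache`, `fetch`, `origin` and `compress` are hypothetical names, and the approach assumes, as stated above, that the same parameters always produce the same response.

```python
import gzip
import time
from typing import Callable, Dict, Tuple

CacheKey = Tuple[str, Tuple[Tuple[str, str], ...]]


class ResponseCache:
    """Cache responses per (path, parameters) for a limited time."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so that expiry can be tested
        self._store: Dict[CacheKey, Tuple[float, bytes]] = {}

    def fetch(self, path: str, params: Dict[str, str],
              origin: Callable[[str, Dict[str, str]], bytes]) -> bytes:
        """Return the cached body, calling `origin` only on a miss or expiry."""
        key = (path, tuple(sorted(params.items())))  # parameter order must not matter
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1]  # served by the proxy, the server is never reached
        body = origin(path, params)
        self._store[key] = (now, body)
        return body


def compress(body: bytes) -> bytes:
    """Gzip a payload before it travels further."""
    return gzip.compress(body)
```

Choosing the time-to-live is the real design decision here: too short and the servers still do most of the work, too long and users may see stale results.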

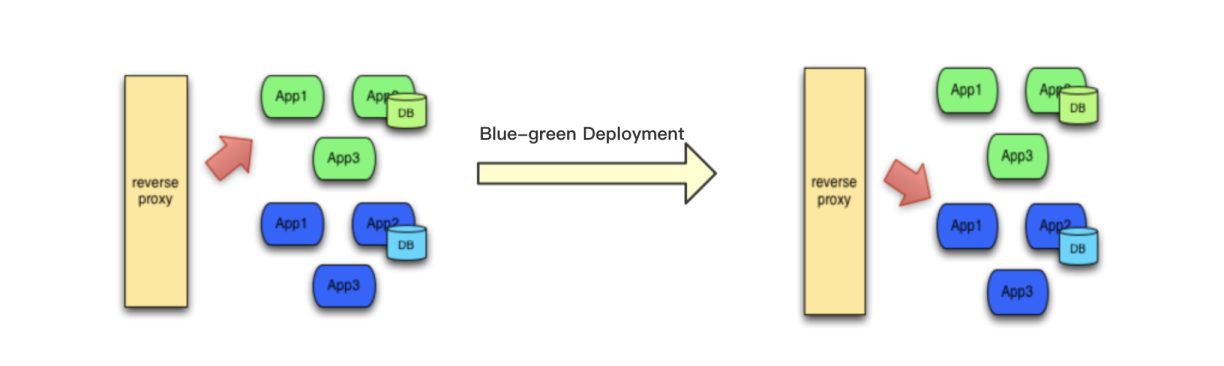

5. Smooth automated deployments. Have you ever heard of Blue-Green Deployments? With that in action, end users won't even realize that you're upgrading the solution while they're browsing your site.
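In a Blue-Green setup two identical environments exist side by side: one serves live traffic while the other is upgraded, and then the proxy flips traffic over. A minimal Python sketch of that switch, with the hypothetical names `BlueGreenRouter`, `upstream` and `switch`, might look like this:

```python
from typing import Dict, List


class BlueGreenRouter:
    """Route all traffic to one of two environments and flip between them."""

    def __init__(self, blue: List[str], green: List[str]):
        self.pools: Dict[str, List[str]] = {"blue": blue, "green": green}
        self.live = "blue"  # environment currently serving users

    def idle(self) -> str:
        """Name of the environment that is safe to upgrade right now."""
        return "green" if self.live == "blue" else "blue"

    def upstream(self) -> List[str]:
        """Servers that should receive user traffic."""
        return self.pools[self.live]

    def switch(self) -> None:
        """Send traffic to the freshly upgraded environment."""
        self.live = self.idle()
```

Rolling back is the same operation: calling `switch()` again points traffic at the previous environment, which is still running the old version.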

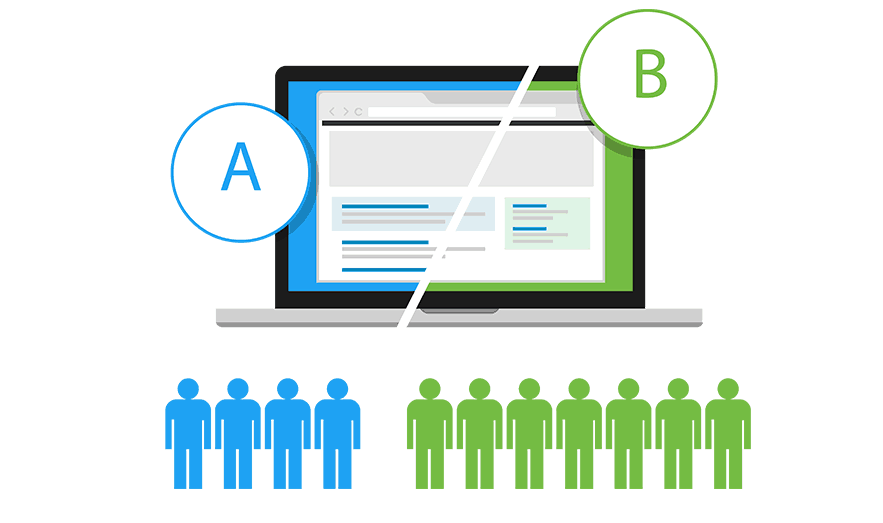

6. A/B Testing. As a result of the previous point, you may have updated some but not all of the end servers. You do not want to update them all yet; instead you'd like to perform A/B testing on both sets and, based on the result, decide whether to complete the update or roll back to the previous version. This is a perfectly valid scenario that a reverse proxy can handle for you.
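One common way to split users between the two sets is to hash a stable user identifier into a bucket, so that the same user always lands on the same variant. The Python sketch below illustrates that idea; the function name `ab_variant` and the use of a user ID (rather than, say, a cookie value) are assumptions for the example.

```python
import hashlib


def ab_variant(user_id: str, percent_b: int) -> str:
    """Assign a user to variant "A" or "B".

    `percent_b` is the share of users (0..100) that should see variant B.
    The assignment is deterministic: the same user_id always gets the same answer.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return "B" if bucket < percent_b else "A"
```

Raising `percent_b` step by step gives a gradual rollout, and setting it back to 0 is the rollback.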

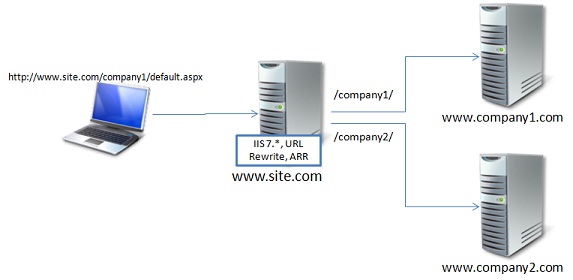

7. URL and Links Rewriting. What if you have a legacy website that functions perfectly well but, similar to scenario (1), there is no way (and no need) to maintain it? The development team has gone, and in any case there is no reason to invest a lot into something that will be retired at some stage. At the same time you have other website(s) that are either successor(s) to the legacy one or additional areas, written in isolation with more modern tools and thus either incompatible or expensive to merge with the existing solution. However, the business wants everything to function under the same main domain name, just in different "folders" under it, so that end users (and search robots!) see no difference between the constituent parts and naturally experience them as a single solid website.

Achieving that is also possible with a Reverse Proxy by rewriting URLs. Please note that not only must an external request coming to site.com/company1 be rewritten to www.company1.com, but all the internal URLs within the requested pages need to be rewritten as well. Also note that this is only possible in conjunction with SSL offload; otherwise the traffic is encrypted end to end and the proxy, as "a man in the middle", cannot read or change it.
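Both directions of the rewrite can be sketched in a few lines of Python, using the site.com/company1 and www.company1.com names from the example above. The function names, the `https` scheme towards the backend and the plain string replacement are assumptions for illustration; a real proxy would handle root-relative links, redirects and cookies as well.

```python
from typing import Optional

PUBLIC_HOST = "site.com"       # the single domain that users see
PREFIX = "/company1"           # "folder" under the public domain
BACKEND = "www.company1.com"   # the website that actually serves it


def rewrite_request(path: str) -> Optional[str]:
    """Map an inbound path under PREFIX to the backend URL, or None if it does not match."""
    if path == PREFIX or path.startswith(PREFIX + "/"):
        rest = path[len(PREFIX):] or "/"
        return f"https://{BACKEND}{rest}"
    return None


def rewrite_links(html: str) -> str:
    """Point absolute backend links in a response body back at the public URL."""
    for scheme in ("https://", "http://"):
        html = html.replace(f"{scheme}{BACKEND}", f"https://{PUBLIC_HOST}{PREFIX}")
    return html


if __name__ == "__main__":
    print(rewrite_request("/company1/about"))
    print(rewrite_links('<a href="https://www.company1.com/about">About</a>'))
```

The second function is the reason the proxy must see the decrypted response: it has to read and modify the page body, not just the request line.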

Not just that: some 6 years ago I wrote a walkthrough on how one can achieve the same result purely and entirely by means of IIS on Windows.

Conclusion.

This article gives a high-level explanation of Reverse Proxies and their primary features. It intentionally does not focus on a specific implementation, to avoid going deeper into technical details.

In real-world solutions, of course, you will meet Reverse Proxy solutions implementing several of these features combined. This may be a typical workflow for processing inbound traffic with a Reverse Proxy:

In any case, I hope you found this helpful!